Modern Reference Architecture Deployed On AWS

Reference Architecture Infrastructure Overview

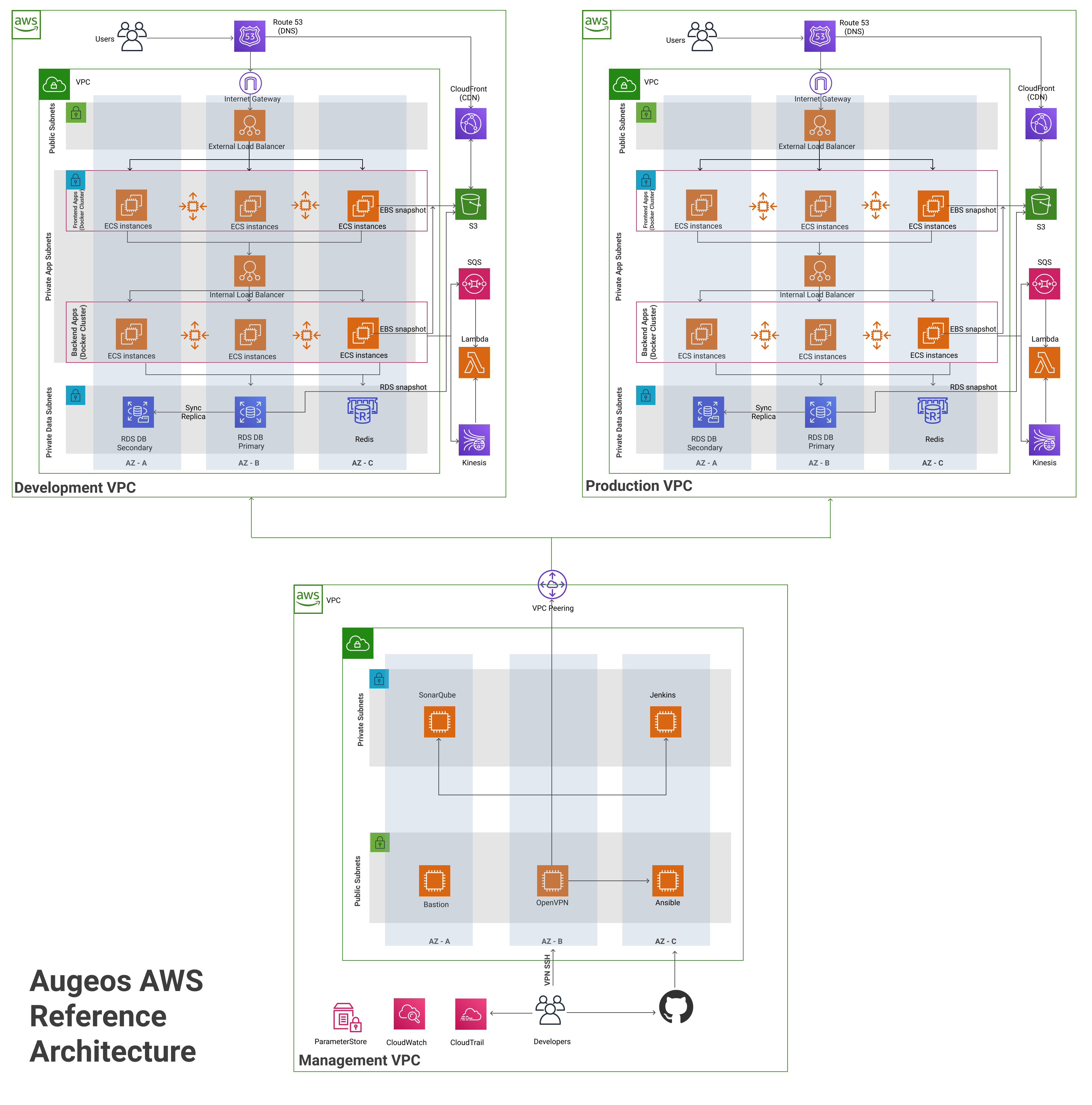

This reference architecture deployed on top of Amazon Web Services (AWS) is an end-to-end tech stack built using Infrastructure as Code (IaC).

It is based on best practices described in Web Application Hosting in the AWS Cloud white paper and customers requirements that runs on highly available and scalable mode.

The Reference Architecture is highly customisable, so what’s deployed may be a bit different from what is in the diagram. Here is an overview of what is actually deployed:

- Infrastructure as code

- Environments

- AWS accounts

- VPCs and subnets

- Load balancers

- Docker clusters (ECS)

- Data stores

- OpenVPN server

- CircleCI

- Monitoring, log aggregation, alerting

- DNS and TLS

- Static content, S3, and CloudFront

- Lambda

- Security

Infrastructure as code

The infrastructure is managed as code, primarily using Terraform. That is, instead of clicking around a web UI or SSHing to a server and manually executing commands, the idea behind infrastructure as code (IaC) is that you write code to define your infrastructure and you let an automated tool (e.g., Terraform) apply the code changes to your infrastructure. This has a number of benefits:

-

You can automate your entire provisioning and deployment process, which makes it much faster and more reliable than any manual process.

-

You can represent the state of your infrastructure in source files that anyone can read rather than a sysadmin’s head.

-

You can store those source files in version control, which means the entire history of your infrastructure is captured in the commit log, which you can use to debug problems, and if necessary, roll back to older versions.

-

You can validate each infrastructure change through code reviews and automated tests.

-

You can package your infrastructure as reusable, documented, battle-tested modules that make it easier to scale and evolve your infrastructure.

Environments

The infrastructure is deployed across multiple environments:

-

dev (account id): Sandbox environment.

-

prod (account id): Production environment.

-

security (account id): All IAM users and permissions are defined in this account.

-

shared-services (account id): DevOps tooling, such as the OpenVPN server.

-

stage (account id): Pre-production environment.

AWS accounts

The infrastructure is deployed across multiple AWS accounts. For example, the development environment is in one account, the production environment in another account, the DevOps tooling in yet another account, and so on. This gives you better isolation between environments so that if you break something in one environment (e.g., staging)—or worse yet, a hacker breaks into that environment—it should have no effect on your other environments (e.g., prod). It also gives you better control over what resources each employee can access. This concept is known as defense in depth.

VPCs and subnets

Each environment lives in a separate Virtual Private Cloud (VPC), which is a logically isolated section within an AWS account. Each VPC defines a virtual network, with its own IP address space and rules for what can go in and out of that network. The IP addresses within each VPC are further divided into multiple subnets, where each subnet controls the routing for its IP address.

- Public subnets are directly accessible from the public Internet.

- Private subnets are only accessible from within the VPC.

Just about everything in this infrastructure is deployed in private subnets to reduce the surface area to attackers. The only exceptions are load balancers and the OpenVPN server, both of which are described below.

Load balancers

Traffic from the public Internet (e.g., requests from your users) initially goes to a public load balancer, which proxies the traffic to the apps apis. This allows you to run multiple copies of application for scalability and high availability. The load balancers being used are:

- Application Load Balancer (ALB): The ALB is a load balancer managed by AWS that is designed for routing HTTP and HTTPS traffic. The advantage of using a managed service is that AWS takes care of fault tolerance, security, and scaling the load balancer for you automatically.

We also deploy an internal load balancer in the private subnets. This load balancer is not accessible to the public. Instead, it’s used as a simple way to do service discovery: every backend service registers with the load balancer at a particular path, and all services know to send requests to this load balancer to talk to other services.

Docker clusters

The application code is packaged into Docker containers and deployed across an Amazon EC2 Container Service (ECS) cluster. The advantage of Docker is that it allows you to package your code so that it runs exactly the same way in all environments (dev, stage, prod). The advantage of a Docker Cluster is that it makes it easy to deploy your Docker containers across a cluster of servers, making efficient use of wherever resources are available. Moreover, ECS can automatically scale your app up and down in response to load and redeploy containers that crashed.

For a quick intro to Docker, see Running microservices on AWS using Docker, Terraform, and ECS.

Data stores

The infrastructure includes the following data stores:

-

Postgres: Postgres is deployed using Amazon’s Relational Database Service (RDS), including automatic failover, backups, and replicas.

-

Memcached: Memcached is deployed using Amazon’s ElastiCache Service, including automatic failover, backups, and replicas.

Lambda

We have deployed several example Lambda functions to show how you can build serverless applications.

OpenVPN server

To reduce your surface area to attackers, just about all of the resources in this infrastructure run in private subnets, which are not accessible from the public Internet at all. To allow developers access to these private resources, we expose a single server publicly: an OpenVPN server. Once you connect to the server using a VPN client, you are “in the network”, and will be able to access the private resources (e.g., you will be able to SSH to your EC2 Instances).

CircleCI

We have set up CircleCi as a Continuous Integration (CI) server. After every commit, a CircleCi job runs your build, tests, packaging, and automated deployment steps.

Monitoring, log aggregation, alerting

You can find metrics, log files from all your servers, and subscribe to alert notifications using Amazon CloudWatch.

DNS and TLS

Amazon Route 53 is used to configure DNS entries for all your services. We have configured SSL/TLS certificates for domain names using Amazon’s Certificate Manager (ACM), which issues certificates that are free and renew automatically.

Static content, S3, and CloudFront

All static content (e.g., images, CSS, JS) is stored in Amazon S3 and served via the CloudFront CDN. This allows you to offload all the work of serving static content from your app server and reduces latency for your users.

Security

Security best practices is built in every aspect of this infrastructure:

-

Network security

-

Server access

-

Application secrets

-

User accounts

-

Auditing

-

Intrusion detection

-

Security updates

-

OS hardening

-

End-to-end encryption

Leave a Comment